Microsoft reveals their DirectX Raytracing (DXR) for DirectX 12

Raytracing is the technique currently used to render high-end animated movies. Offline server farms can take considerable amounts of time to create a single frame of the latest Disney/Pixar movie, which is why raytracing is often considered out of reach for real-time game rendering.

Today's 3D games use rasterisation to create images, which offers considerable advantages when it comes to raw speed. Simplifying the situation a little, rasterisation does not simulate light directly and has to approximate it, whereas raytracing follows rays of light from the eye/viewport outwards into the scene, allowing reflections, shadows, refraction and other advanced optical effects to be rendered accurately.
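To make the "from the eye/viewport outwards" idea concrete, here is a minimal Python sketch of the first step of a raytracer: one ray is fired from the eye through each pixel and tested against a single sphere. It is purely illustrative and has nothing to do with the DXR API itself; the function names (`primary_ray`, `ray_sphere_hit`, `render`), the scene and the image size are all assumptions made for this example.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the nearest positive hit distance along the ray, or None.

    Assumes `direction` is unit length and the ray starts outside the sphere.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    # Solve t^2 + b*t + c = 0 (the quadratic's leading coefficient is 1
    # because the direction is normalised).
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0.0 else None

def primary_ray(x, y, width, height):
    """Unit direction from the eye through the centre of pixel (x, y).

    The eye sits at the origin and the image plane at z = -1 (an assumption).
    """
    u = (x + 0.5) / width * 2.0 - 1.0
    v = 1.0 - (y + 0.5) / height * 2.0
    length = math.sqrt(u * u + v * v + 1.0)
    return (u / length, v / length, -1.0 / length)

def render(width=16, height=8):
    """Trace one ray per pixel and return the image as rows of '#' and '.'."""
    eye = (0.0, 0.0, 0.0)
    center, radius = (0.0, 0.0, -3.0), 1.0  # hypothetical one-sphere scene
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            hit = ray_sphere_hit(eye, primary_ray(x, y, width, height), center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows

if __name__ == "__main__":
    print("\n".join(render()))
```

A full raytracer would go on to spawn further rays from each hit point for shadows, reflections and refraction, and that extra work per pixel is where the rendering cost comes from.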

Microsoft’s DirectX Raytracing isn’t meant to go all-in on the technology. Instead, it acts as a standard API that will allow both hardware and software to transition to a real-time ray-traced future. DXR is designed to use software and hardware acceleration to introduce ray-traced elements alongside rasterisation, rather than replacing it in one fell swoop.

DXR is designed to work on today’s GPU hardware through a fallback layer, which allows developers to get started on raytraced content for DirectX 12 right away. It is worth noting that this method will be slow to render until newer hardware with hardware-level acceleration is available. Nvidia’s Volta graphics cards are said to deliver both hardware and software support for DirectX Raytracing, with older cards providing software support only.

Both AMD and Nvidia graphics cards will support DXR, though at this time it is unknown whether AMD/Radeon offers any form of hardware acceleration. It is likely that raytracing won’t be computationally worthwhile for a while, though this API paves the way towards a raytraced future. Our theory is that Nvidia could be using Volta’s Tensor cores to offer raytracing acceleration, as the company has showcased AI-powered raytracing optimisation for OptiX before. Does this mean that Tensor cores are coming to gaming GPUs outside of the Titan V?

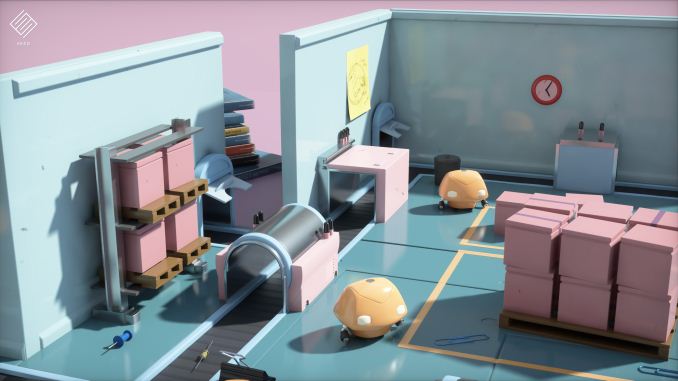

(A ray-traced example image from SEED, an in-production engine from EA)

With DXR, Microsoft is offering a vital stepping stone towards real-time ray tracing, a step which game developers seem more than willing to take. So far, a total of five game engines have confirmed plans to support DXR: the Frostbite Engine (EA/DICE), the SEED engine (EA, in-development), 3DMark (Futuremark), Unreal Engine 4 (Epic Games) and Unity (Unity Technologies). Microsoft says that it has other partners that it cannot disclose at this time.

The question today is how far developers will go to support this feature, as it does not extend beyond the Xbox One and Windows 10 given DirectX 12’s platform limitations. Even so, this will offer something new for hardware manufacturers to strive towards, opening up a new avenue for AMD and Nvidia to compete with each other. It’s now only a matter of time before we see a shipping game with raytraced elements.

You can join the discussion on DirectX 12’s new Raytracing extension on the OC3D Forums.