What are Intel IPUs and why do they matter?

Intel Discusses their Infrastructure Processing Unit Plans

In recent months, Intel has been talking a lot about its new IPU (Infrastructure Processing Unit) product lines, which has led many hardware enthusiasts to wonder, “What is an IPU?” and “How will IPUs affect me?” In this article, we will briefly explain what Intel’s IPUs are and how they will impact the future of the cloud.

Networking Evolved

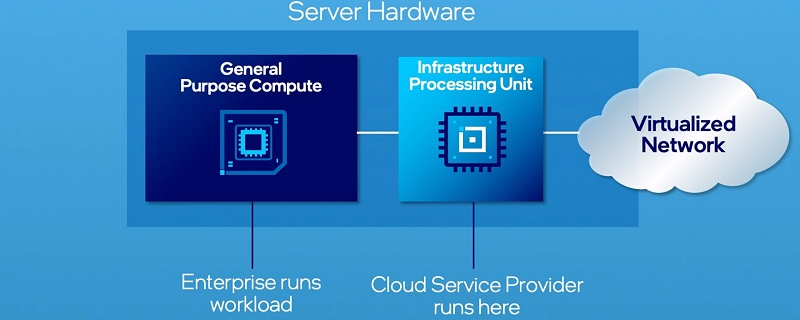

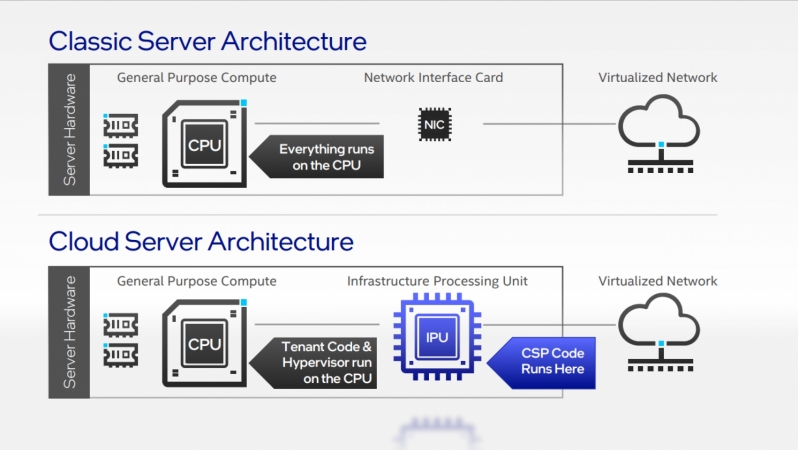

Standard server architectures rely on their processors to deliver general-purpose compute, doing everything that a system needs to keep operating. Everything runs on the server’s processor, and as the cloud has evolved, CPUs have become increasingly bogged down with networking loads.

While bigger and better processors can be used to alleviate these concerns, brute force is rarely the best way to tackle a problem, especially when networking traffic is expected to evolve further. The smart solution is to offload work from a server’s central processing unit, allowing it to do more useful work for users. For servers that are rented out to clients, this allows for more useful performance to be delivered to users and for security to be improved in a number of ways.

By moving infrastructure compute onto the networking hardware, in the form of what Intel calls an Infrastructure Processing Unit (IPU), Intel aims to help cloud providers and hyperscalers get more compute performance from their processors and increase the efficiency of their hardware.

Intel’s solution will enable infrastructure acceleration using programmable software and hardware that can cater to the needs of individual clients while acting as a common framework for a variety of networking demands.

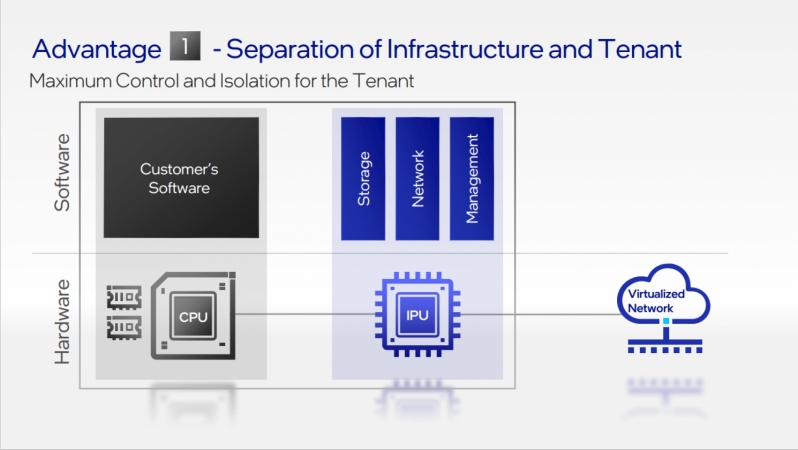

Advantage 1: The separation of infrastructure from tenants

By separating networking from client compute, cloud service providers can increase the security of their infrastructure by adding a layer of isolation to systems. Networking compute is handled by the IPU, which means that rogue clients cannot use their processors to wreak havoc within a server farm.

Another major benefit is that clients will not need to be concerned about their rented compute resources being consumed needlessly by their service provider’s networking solutions. Clients get more access to hardware, and service providers can keep their clients more robustly separated from the hardware that they do not want them to access.

IPUs can give cloud and communication service providers more control over their networks, and that is a good thing.

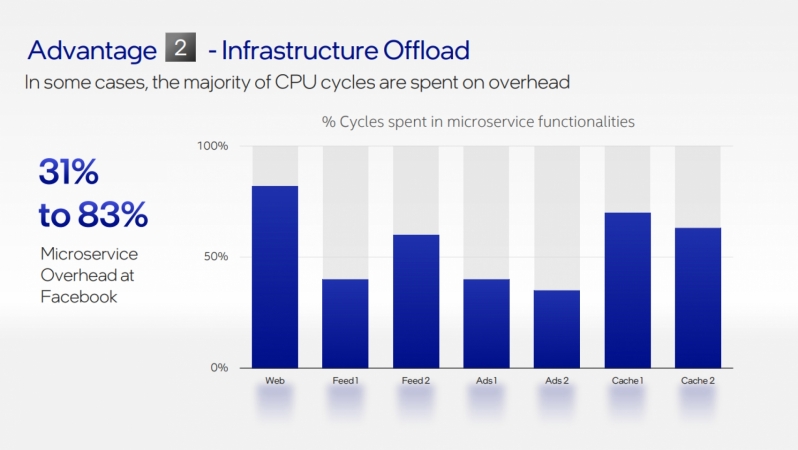

Advantage 2: Performance is key

If two things matter in the world of server infrastructure, they are performance and power. IPUs can allow clients to offload work away from their processors and do that work more efficiently using dedicated acceleration units.

Working with clients like Google and Facebook, Intel has demonstrated that microservice communication overheads can consume 22-80% of server CPU cycles. Using IPUs, Intel plans to liberate this lost performance and allow its Xeon server processors to be used for their intended purpose.
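To put that 22-80% range into perspective, here is a rough back-of-the-envelope sketch in Python. It is not an Intel model: the function name `app_capacity_gain` and the `offloaded_share` parameter are made up for illustration, and it assumes the best case where offloaded overhead costs the host CPU nothing at all.

```python
def app_capacity_gain(overhead_fraction: float, offloaded_share: float = 1.0) -> float:
    """Factor by which CPU cycles available to applications grow after offload.

    overhead_fraction: share of CPU cycles lost to infrastructure overhead (0 to <1).
    offloaded_share:   share of that overhead moved off the CPU (hypothetical
                       parameter; 1.0 means everything is offloaded).
    """
    if not 0.0 <= overhead_fraction < 1.0:
        raise ValueError("overhead_fraction must be in [0, 1)")
    if not 0.0 <= offloaded_share <= 1.0:
        raise ValueError("offloaded_share must be in [0, 1]")

    # Cycles left for applications before any offload.
    before = 1.0 - overhead_fraction
    # Cycles left once part of the overhead has moved off the CPU.
    after = 1.0 - overhead_fraction * (1.0 - offloaded_share)
    return after / before


if __name__ == "__main__":
    # The two ends of the 22-80% range quoted above.
    for overhead in (0.22, 0.80):
        gain = app_capacity_gain(overhead)
        print(f"{overhead:.0%} overhead -> {gain:.2f}x application capacity")
```

Under these idealised assumptions, the low end of the range works out to roughly 1.28x more CPU time for applications, while the high end works out to about 5x. Real-world gains would be smaller, since not every overhead can be moved off the host.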

If implemented correctly, IPUs can be a huge win for clients. Using dedicated accelerators and programmed FPGAs, Intel’s IPUs will let clients handle infrastructure workloads more efficiently, freeing their server processors for more useful work and enabling more power-efficient networks.

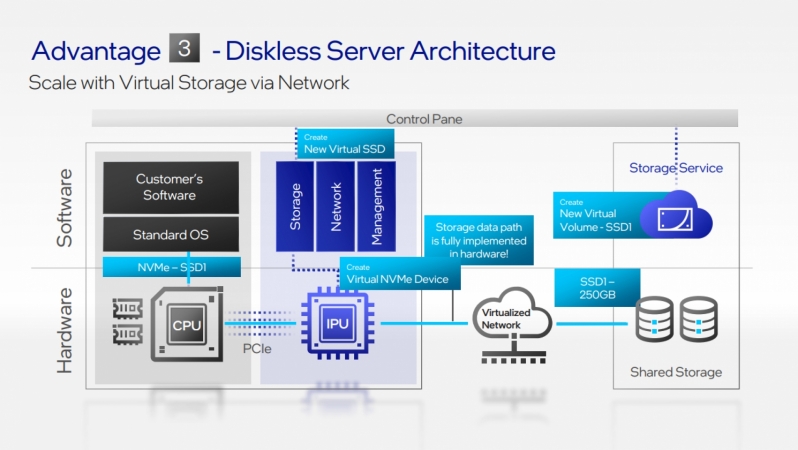

Advantage 3: The future of servers is diskless

Intel’s IPUs can enable the creation of diskless servers, allowing hyperscalers to reduce the cost of individual servers by reducing the need for per-server storage and by taking storage management workloads off the CPU.

CPU overhead can be reduced by offloading storage to a virtualised network of shared storage. With all storage networked, this eliminates the issues that can arise when specific servers have too much or too little usable storage.

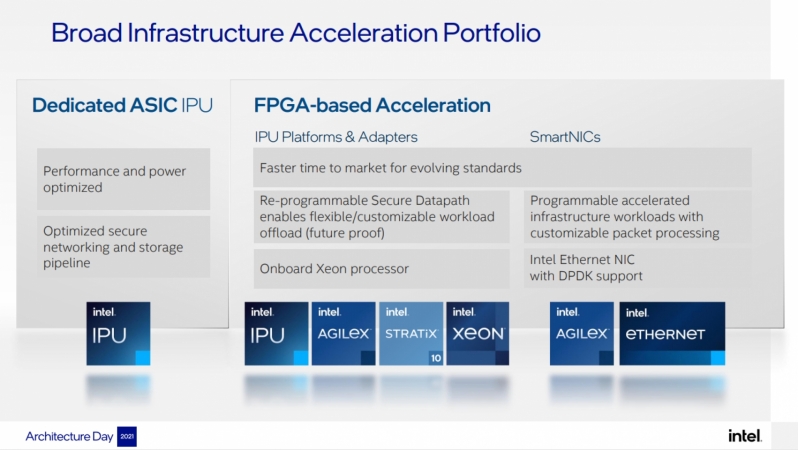

Intel’s work on IPUs is two-fold: the company is working on dedicated ASIC IPUs that deliver optimised performance and power draw, and on FPGA-based solutions that can evolve with changing market standards and be re-programmed to meet the needs of specific clients and custom workloads.

Both the ASIC and FPGA development paths are useful, granting Intel a large potential market. That said, Intel will see competition from the likes of Nvidia/Mellanox and custom solutions from large server companies like Amazon and Microsoft.

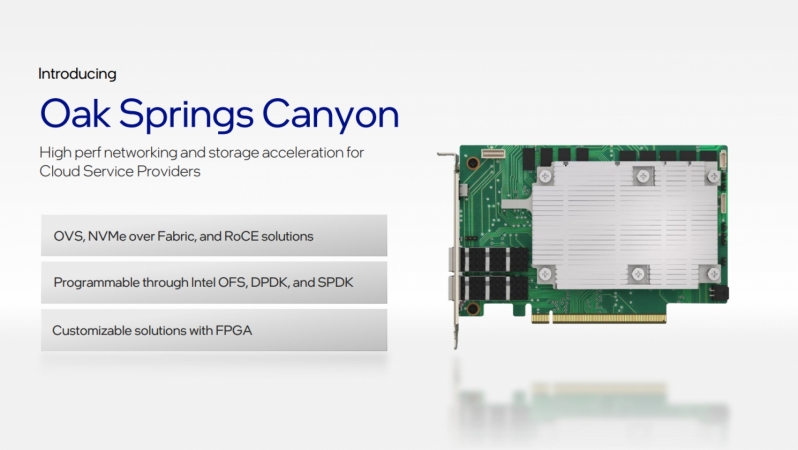

The Hardware – Oak Springs Canyon

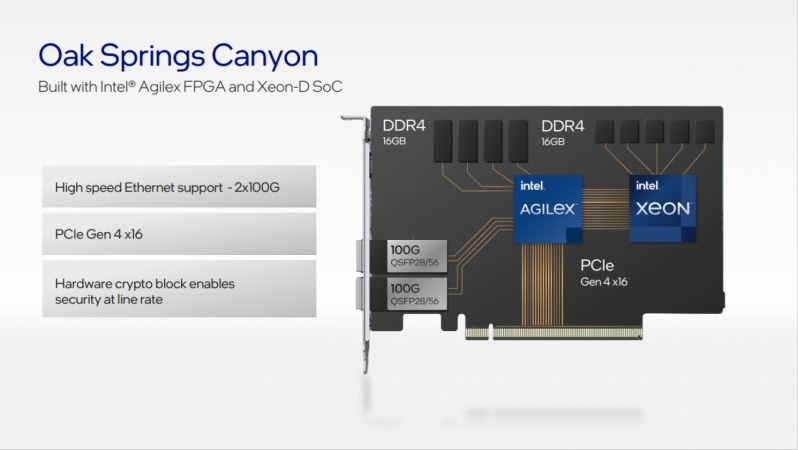

Oak Springs Canyon is a dual 100Gb IPU that features Xeon-D processor cores, Agilex FPGAs, PCIe 4.0 connectivity and a dedicated cryptography block that can secure networked communications.

With support for features like NVMe over Fabrics and RoCE v2, Oak Springs Canyon can be used to significantly reduce CPU overhead. Customers can control and customise this IPU using Intel’s Open FPGA Stack (OFS) and commonly used software development kits like DPDK and SPDK.

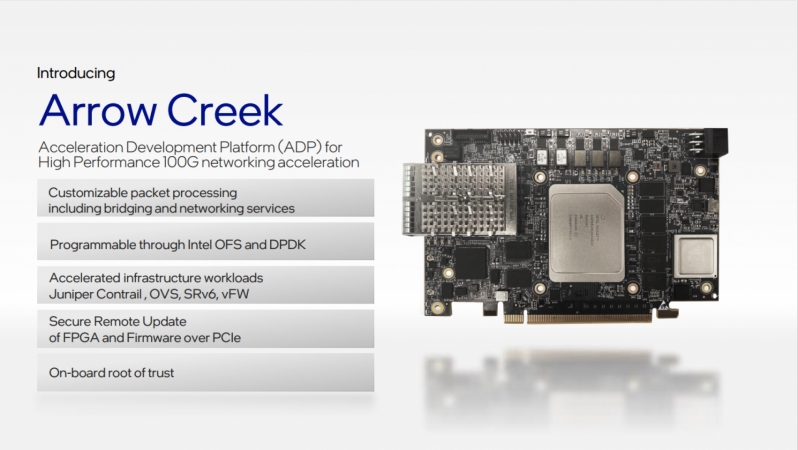

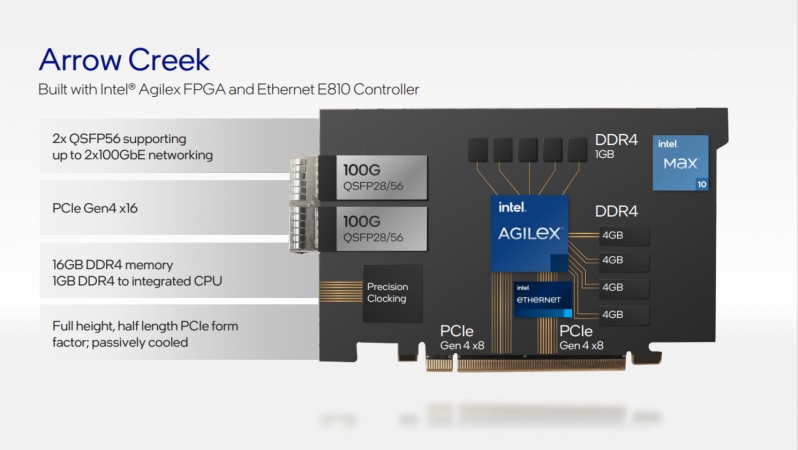

Arrow Creek is the codename of Intel’s N6000 Acceleration Platform, a 100Gb SmartNIC that’s designed to be used with Xeon-based servers. It features an Agilex FPGA and PCIe 4.0 connectivity, but it is less versatile than Oak Springs Canyon. Since it lacks integrated compute cores outside of its FPGA, Intel does not consider it a full-on IPU, even though it has a lot of the same features.

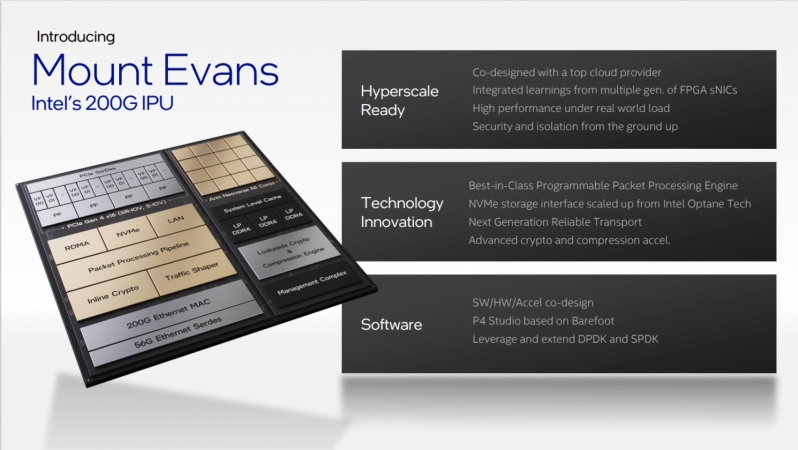

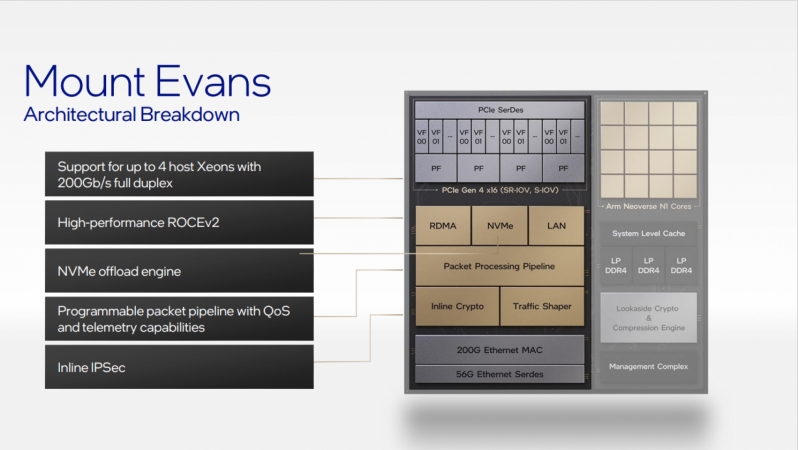

Mount Evans – Intel’s ASIC IPU

Mount Evans will be the first of Intel’s ASIC IPUs, integrating into silicon many features that proved to be incredibly useful when Intel developed its first FPGA-based SmartNICs.

By creating dedicated silicon for many tasks, Intel is able to dramatically increase the performance and power efficiency of Mount Evans over FPGA-based solutions. Compute-wise, Mount Evans features 16 Arm Neoverse N1 cores, three memory channels, and a lookaside crypto and compression engine.

Mount Evans will be Intel’s first 200G IPU, featuring a “best in class” programmable packet processing engine and an accelerated NVMe storage interface. Mount Evans promises to be fast and versatile, which is good news for Intel.

Intel’s promising a lot with its IPUs, and it is clear that Intel plans to continue investing in the ASIC and FPGA sides of the network acceleration market. We can expect to hear a lot more about IPUs in the future, especially as this technology starts getting used by customers. That said, Intel has competition within this market, both from bespoke solutions from Microsoft and Amazon, and competition from other networking companies like Mellanox (which is now part of Nvidia).

You can join the discussion on Intel’s IPUs on the OC3D Forums.