AMD targets a 30x increase in efficiency for its HPC chips by 2025

AMD’s targeting colossal performance and efficiency gains for its AI training and supercomputing hardware

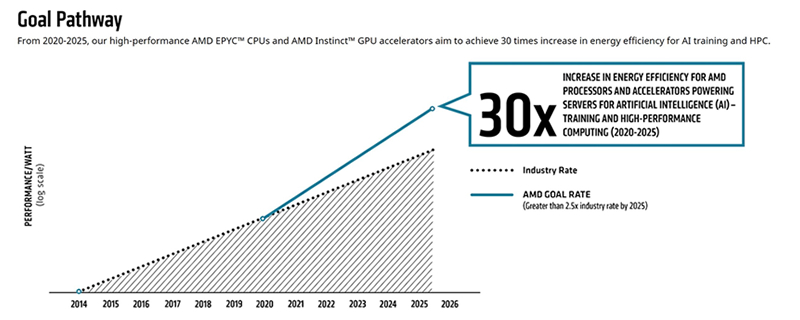

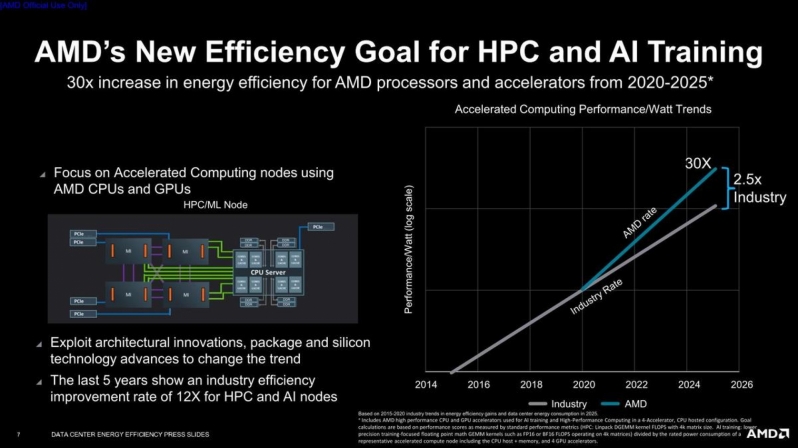

AMD today announced an ambitious set of performance targets for their future EPYC CPUs and Instinct Accelerators, pledging to deliver a 30x increase in energy efficiency by 2025. This rate of improvement is said to be 2.5x the industry aggregate for the past five years, showcasing AMD’s ambition to outpace the technological advancement of their competitors.

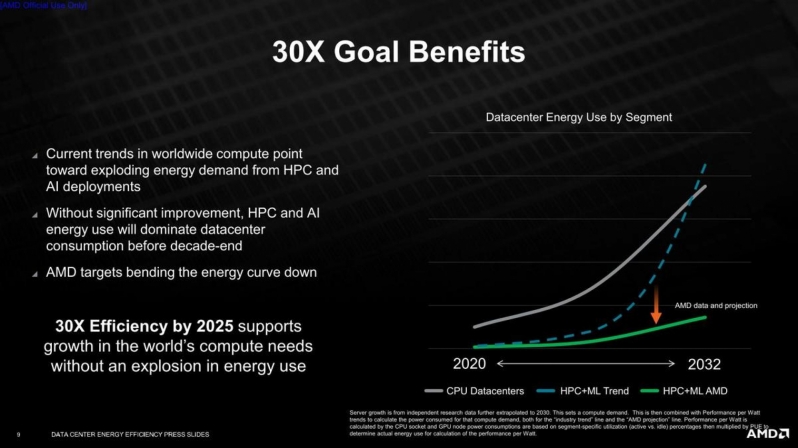

This lofty target stems from the industry’s increased processing requirements for AI accelerated compute nodes. Be it for AI training, weather prediction, or other large-scale supercomputer simulations, the world needs insane levels of compute performance. That said, the world also needs efficient supercomputers, and AMD’s targets would see the energy use of an HPC/AI training system decrease by over 96%.
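As a quick sanity check on those figures, the short Python sketch below converts an efficiency multiplier into the energy saved per operation and into an implied yearly rate of improvement. It assumes efficiency means work done per unit of energy and that gains compound evenly over the five years; the function names are ours, not AMD's.

```python
def energy_reduction(efficiency_gain: float) -> float:
    """Fraction of energy saved per operation for a given efficiency multiplier."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # Doing the same work at N times the efficiency needs 1/N of the energy.
    return 1.0 - 1.0 / efficiency_gain


def annual_rate(total_gain: float, years: int) -> float:
    """Compound yearly factor that reaches total_gain after the given number of years."""
    if years <= 0:
        raise ValueError("years must be positive")
    return total_gain ** (1.0 / years)


if __name__ == "__main__":
    print(f"Energy saved per operation: {energy_reduction(30):.1%}")  # 96.7%
    print(f"Implied yearly gain: {annual_rate(30, 5):.2f}x")          # 1.97x
```

The 96.7% result sits between the "over 96%" quoted above and the rounded 97% in AMD's press release, and the yearly figure suggests AMD would need to roughly double efficiency every year to hit the target.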

AMD is exploring many performance-boosting and efficiency-increasing technologies in their future CPU, GPU, and AI accelerator designs. We have seen this recently with the launch of AMD’s Radeon RX 6000 series GPUs, which deliver staggering improvements in efficiency over their older 7nm RDNA and Radeon Vega predecessors. Similar improvements have also been seen with AMD’s Ryzen and EPYC CPU lineup. That said, AMD’s new 30x efficiency target is ambitious.

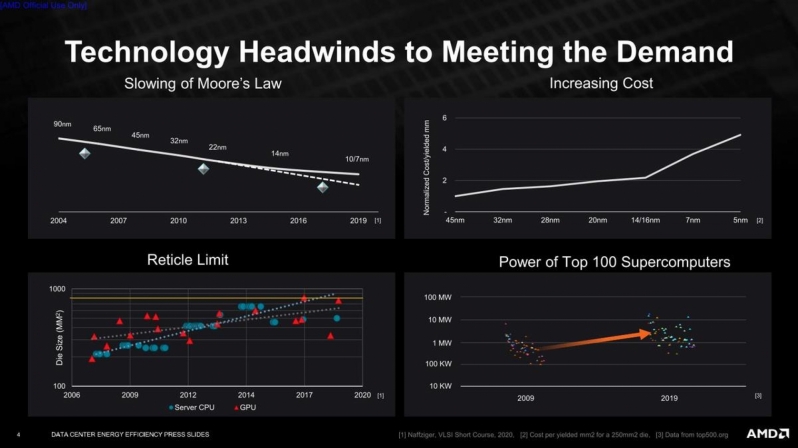

Part of AMD’s performance gains will come from the use of newer lithography nodes, while others will come from new technologies like AMD’s 3D chip stacking (such as AMD’s planned V-Cache tech for future Ryzen and EPYC processors). Beyond that, AMD’s improved performance will come from architectural innovations. AMD plans to achieve higher performance levels with their future Zen 4 and Zen 5 CPU cores and their planned CDNA 2 and CDNA 3 accelerator architectures.

AMD’s adopting an efficiency-first design approach to the HPC market, and that mindset will pay dividends elsewhere. This is especially true for the mobile PC market, where efficiency gains translate to increased battery life or boosted performance. For desktop PC users, efficiency gains will decrease the power draw of future systems and reduce the cooling requirements of high-end chips. Efficiency matters, and that’s why AMD’s new efficiency targets are worth taking notice of.

What follows is AMD’s Press Release for their planned 30x increase in energy efficiency for AMD processors and accelerators between 2020 and 2025.

PR – High-performance AMD EPYC™ CPUs and AMD Instinct™ accelerators target delivering unprecedented advance in energy efficiency for Artificial Intelligence training and Supercomputing applications

SANTA CLARA, Calif., Sept. 29, 2021 (GLOBE NEWSWIRE) — AMD (NASDAQ: AMD) today announced a goal to deliver a 30x increase in energy efficiency for AMD EPYC CPUs and AMD Instinct accelerators in Artificial Intelligence (AI) training and High Performance Computing (HPC) applications running on accelerated compute nodes by 2025.1 Accomplishing this ambitious goal will require AMD to increase the energy efficiency of a compute node at a rate that is more than 2.5x faster than the aggregate industry-wide improvement made during the last five years.2

Accelerated compute nodes are the most powerful and advanced computing systems in the world used for scientific research and large-scale supercomputer simulations. They provide the computing capability used by scientists to achieve breakthroughs across many fields including material sciences, climate predictions, genomics, drug discovery and alternative energy. Accelerated nodes are also integral for training AI neural networks that are currently used for activities including speech recognition, language translation and expert recommendation systems, with similar promising uses over the coming decade. The 30x goal would save billions of kilowatt hours of electricity in 2025, reducing the power required for these systems to complete a single calculation by 97% over five years.

“Achieving gains in processor energy efficiency is a long-term design priority for AMD and we are now setting a new goal for modern compute nodes using our high-performance CPUs and accelerators when applied to AI training and high-performance computing deployments,” said Mark Papermaster, executive vice president and CTO, AMD. “Focused on these very important segments and the value proposition for leading companies to enhance their environmental stewardship, AMD’s 30x goal outpaces industry energy efficiency performance in these areas by 150% compared to the previous five-year time period.”

“With computing becoming ubiquitous from edge to core to cloud, AMD has taken a bold position on the energy efficiency of its processors, this time for the accelerated compute for AI and High Performance Computing applications,” said Addison Snell, CEO of Intersect360 Research. “Future gains are more difficult now as the historical advantages that come with Moore’s Law have greatly diminished. A 30-times improvement in energy efficiency in five years will be an impressive technical achievement that will demonstrate the strength of AMD technology and their emphasis on environmental sustainability.”

Increased energy efficiency for accelerated computing applications is part of the company’s new goals in Environmental, Social, Governance (ESG) spanning its operations, supply chain and products. For more than twenty-five years, AMD has been transparently reporting on its environmental stewardship and performance. For its recent achievements in product energy efficiency, AMD was named to Fortune’s Change the World list in 2020 that recognizes outstanding efforts by companies to tackle society’s unmet needs.

Methodology

In addition to compute node performance/Watt measurements3, to make the goal particularly relevant to worldwide energy use, AMD uses segment-specific datacenter power utilization effectiveness (PUE) with equipment utilization taken into account.3 The energy consumption baseline uses the same industry energy per operation improvement rates as from 2015-2020, extrapolated to 2025. The measure of energy per operation improvement in each segment from 2020-2025 is weighted by the projected worldwide volumes4 multiplied by the Typical Energy Consumption (TEC) of each computing segment to arrive at a meaningful metric of actual energy usage improvement worldwide.
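The weighting step described above can be pictured with a small Python sketch. It is only one plausible reading of the methodology: it assumes the aggregate figure is a weighted mean of each segment's improvement factor, with weights equal to projected volume multiplied by TEC. The `Segment` type, the function name, and every number in the example are hypothetical placeholders, not AMD's data.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Segment:
    name: str
    improvement: float   # energy-per-operation improvement factor, 2020-2025
    volume_units: float  # projected worldwide unit volume
    tec_kwh: float       # Typical Energy Consumption per unit


def weighted_improvement(segments: Sequence[Segment]) -> float:
    """Mean improvement weighted by volume x TEC (an assumed reading of the text)."""
    weights = [s.volume_units * s.tec_kwh for s in segments]
    total = sum(weights)
    if total <= 0:
        raise ValueError("total weight must be positive")
    return sum(w * s.improvement for w, s in zip(weights, segments)) / total


if __name__ == "__main__":
    # Hypothetical values chosen only to make the arithmetic easy to follow.
    example = [
        Segment("AI training", improvement=32.0, volume_units=1_000, tec_kwh=2.0),
        Segment("HPC", improvement=28.0, volume_units=3_000, tec_kwh=1.0),
    ]
    print(f"{weighted_improvement(example):.1f}x")  # 29.6x
```

Weighting by volume and energy use means a segment that ships more units, or draws more energy per unit, pulls the headline number further towards its own improvement factor.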

Dr. Jonathan Koomey, President, Koomey Analytics, said “The energy efficiency goal set by AMD for accelerated compute nodes used for AI training and High Performance Computing fully reflects modern workloads, representative operating behaviors and accurate benchmarking methodology.”

1 Includes AMD high performance CPU and GPU accelerators used for AI training and High-Performance Computing in a 4-Accelerator, CPU hosted configuration. Goal calculations are based on performance scores as measured by standard performance metrics (HPC: Linpack DGEMM kernel FLOPS with 4k matrix size. AI training: lower precision training-focused floating point math GEMM kernels such as FP16 or BF16 FLOPS operating on 4k matrices) divided by the rated power consumption of a representative accelerated compute node including the CPU host + memory, and 4 GPU accelerators.

2 Based on 2015-2020 industry trends in energy efficiency gains and data center energy consumption in 2025.

3 The CPU socket and GPU node power consumptions are based on segment-specific utilization (active vs. idle) percentages then multiplied by PUE to determine actual energy use for calculation of the performance per Watt.

4 Total 2025 Server CPUs – 18.8 Mu (IDC – Q1 2021 Tracker), Total HPC CPUs – 3.3Mu (Hyperion- Q4 2020 Tracker), Total 2025 HPC GPUs 624k (Hyperion HPC Market Analysis, April ’21)
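Footnotes 1 and 3 together describe a performance-per-Watt figure built from benchmark FLOPS and a node power number adjusted for utilization and PUE. The Python sketch below shows one way that calculation could look. The exact formula is an assumption on our part (a utilization-weighted average of active and idle draw, scaled by PUE), and the node figures in the example are hypothetical, not measurements of any AMD system.

```python
def effective_power_watts(active_w: float, idle_w: float,
                          utilization: float, pue: float) -> float:
    """Average power draw weighted by utilization, then scaled by datacenter PUE."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    average_w = utilization * active_w + (1.0 - utilization) * idle_w
    return average_w * pue


def perf_per_watt(flops: float, power_w: float) -> float:
    """Benchmark throughput divided by the power needed to deliver it."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return flops / power_w


if __name__ == "__main__":
    # Hypothetical node: CPU host + memory + 4 GPU accelerators.
    power = effective_power_watts(active_w=2000.0, idle_w=500.0,
                                  utilization=0.8, pue=1.25)
    print(f"Effective power: {power:.0f} W")                       # 2125 W
    print(f"Efficiency: {perf_per_watt(4.25e13, power):.2e} FLOPS/W")  # 2.00e+10 FLOPS/W
```

Including idle time and PUE pushes the effective power above the simple utilization-weighted average, so the resulting efficiency figure reflects energy drawn by the whole facility rather than by the chips alone.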

You can join the discussion on AMD targeting a 30x increase in efficiency for its HPC chips by 2025 on the OC3D Forums.
