Nvidia showcases a 4x Tesla V100 Volta system at Computex


(Images from Tweaktown)

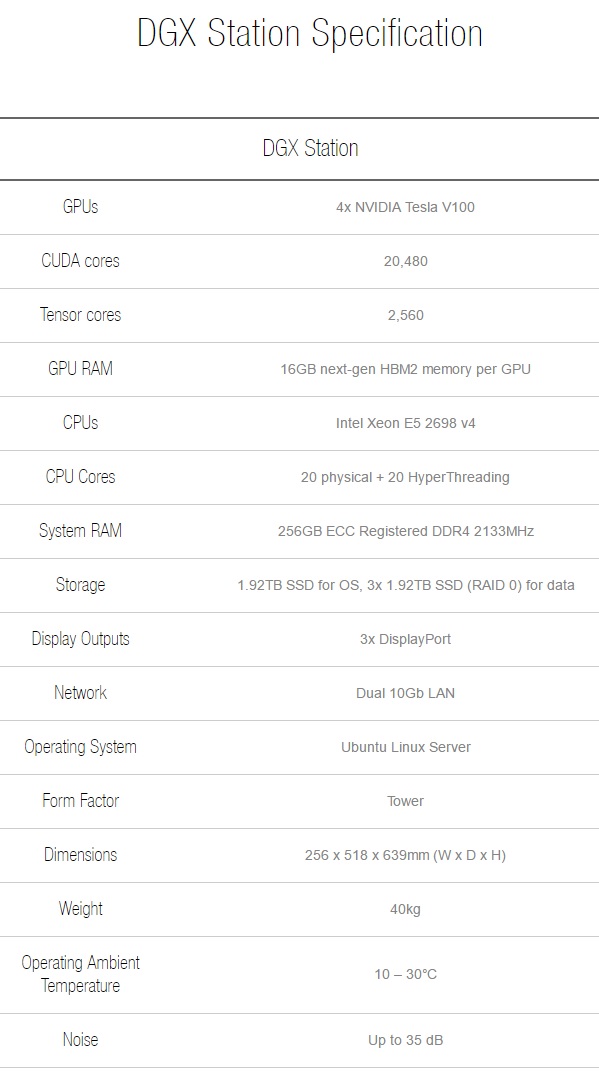

This server will deliver a total of 60 TFLOPS of FP32 GPU performance, and that figure does not even include the additional performance provided by Nvidia’s dedicated Tensor cores for AI calculations. This is more performance than is available in any other quad-GPU system, which is an astounding achievement by Nvidia.


Attendees of Computex have been told that this system will cost $69,000 when Nvidia officially releases it, making it more expensive than most cars. Would you pay that much for a system without tempered glass or RGB lighting?


You can join the discussion on Nvidia’s Tesla V100 powered system on the OC3D Forums.